How to Talk About AI in Job Interviews

Dos and don'ts for showcasing your use of AI

Hey, Prasad here 👋 I’m the voice behind the weekly newsletter “Big Tech Careers.”

This week we share a quick guide on demonstrating your AI-driven productivity in interviews.

If you like the article, click the ❤️ icon. That helps me know you enjoy reading my content.

AI questions have become standard in tech interview loops. Not because companies expect you to be an AI engineer. Because they want to know if you’ve adapted to how work is changing.

The problem: most candidates either undersell (“I use ChatGPT sometimes”) or overclaim (“I’m basically an AI expert”).

Both hurt you. Underselling makes you seem behind. Overclaiming invites follow-up questions you can’t answer.

This guide gives you what you need: what interviewers actually assess, how to answer at the right level for your role, and what to say if you haven’t used AI much.

Vamsi and I are hosting a FREE lightning talk on Friday, January 16th, where we will dive deep into strategies for preparing for big tech interviews. We will also have a special offer for you to try the Revarta platform.

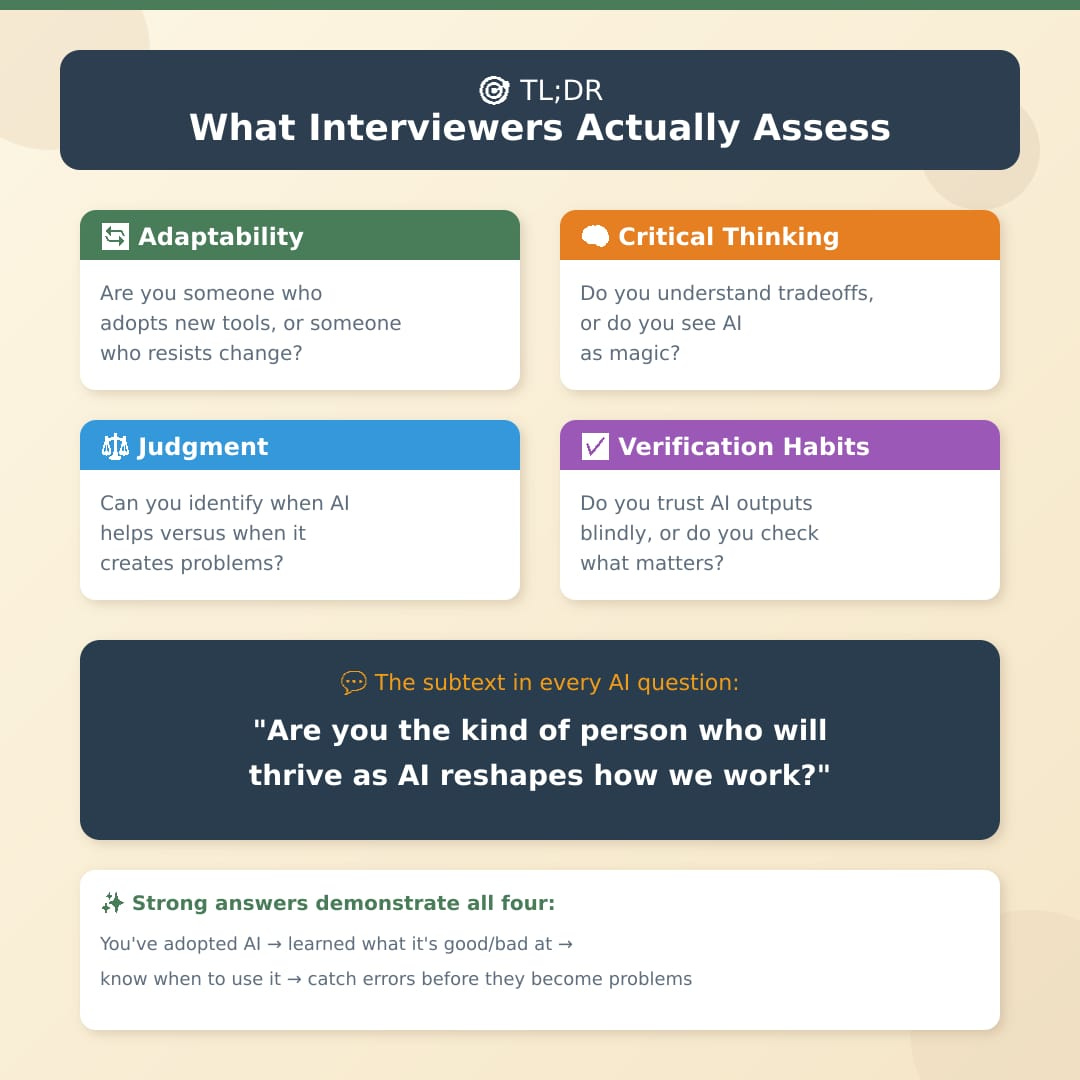

What Interviewers Actually Assess

When interviewers ask about AI, they’re evaluating four things:

Adaptability: Are you someone who adopts new tools, or someone who resists change?

Critical thinking: Do you understand tradeoffs, or do you see AI as magic?

Judgment: Can you identify when AI helps versus when it creates problems?

Verification habits: Do you trust AI outputs blindly, or do you check what matters?

The subtext in every AI question: Are you the kind of person who will thrive as AI reshapes how we work?

Strong answers demonstrate all four. You’ve adopted AI (adaptability), you’ve learned what it’s good and bad at (critical thinking), you know when to use it and when not to (judgment), and you catch errors before they become problems (verification).

Example that hits all four: “I use AI to accelerate research and first drafts. What took 4 hours now takes 45 minutes. But I learned early that it’s confidently wrong about 15% of the time, so I built a verification step into my process. Now I know which outputs I can trust and which need checking. The biggest shift was realizing that how I structure my requests matters more than which tool I use.”

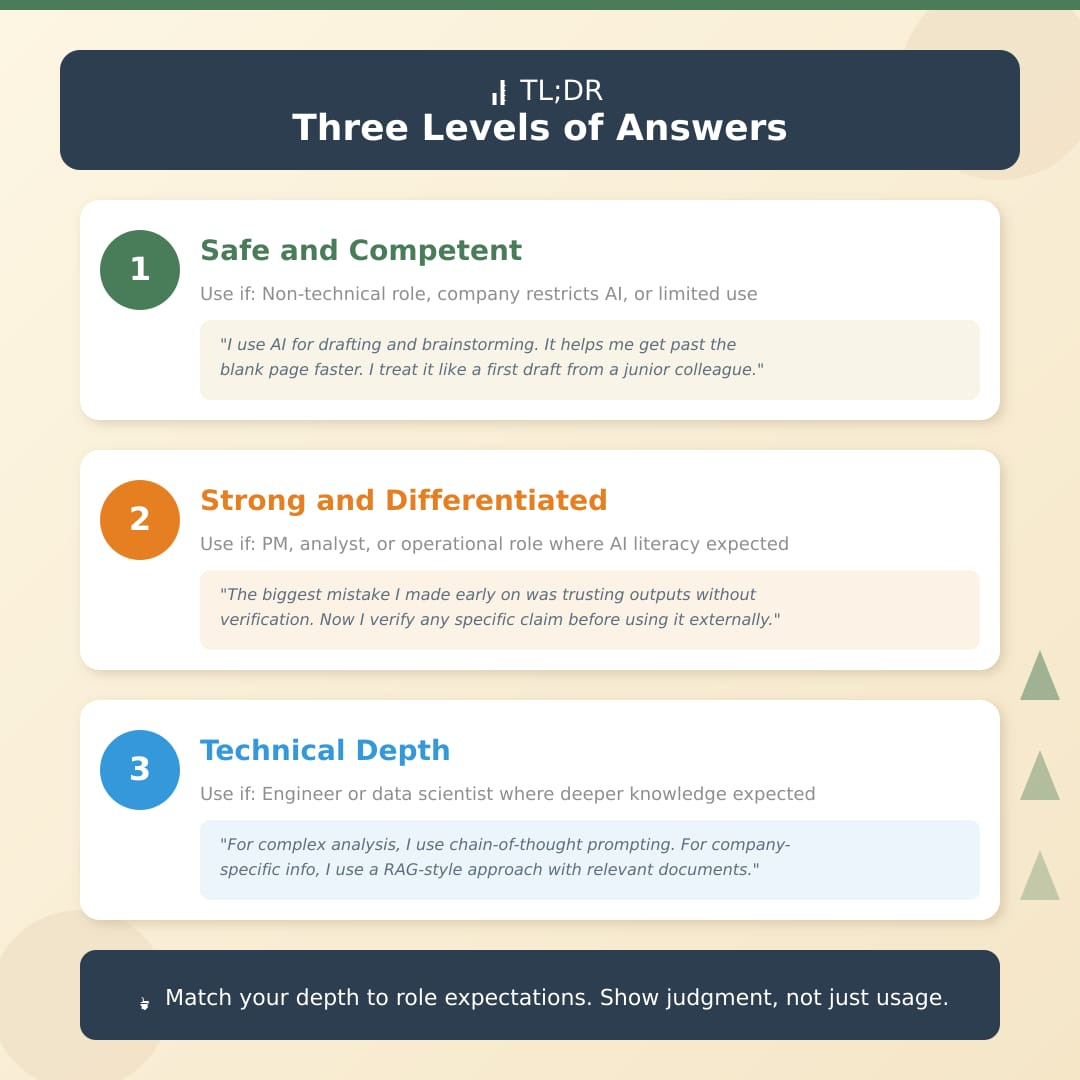

Three Levels of Answers

Not every role requires the same depth. Here’s how to calibrate.

Level 1: Safe and Competent

Use this if: You’re in a non-technical role, your company restricts AI, or you haven’t used it extensively.

Template: “I use AI for [1-2 specific tasks]. What I’ve learned is that it’s helpful for [type of work] but I always [verification step]. I’m continuing to explore [area you’re developing].”

Example: “I use AI mainly for drafting and brainstorming. It helps me get past the blank page faster. What I’ve learned is that I have to edit heavily. The AI writes generically, and my job is to add specifics and judgment. I treat it like a first draft from a junior colleague.”

Level 2: Strong and Differentiated

Use this if: You’re a PM, an analyst, or in an operational role where AI literacy is expected.

Template: “I use AI for [specific tasks] with a deliberate process. The biggest mistake I made early on was [learning moment]. Now I [improved approach].”

Example: “I use AI for research synthesis and first drafts. The biggest mistake I made early on was trusting outputs without verification. I cited a fabricated statistic in a presentation. Now I verify any specific claim before using it externally. What I’ve learned is that how I frame the request matters more than which tool I use.”

Level 3: Technical Depth

Use this if: You’re an engineer or data scientist, or in another role where deeper technical knowledge is expected.

Example: “I use different approaches depending on the task. For complex analysis, I use chain-of-thought prompting, asking the model to reason step by step. For company-specific information, I use a RAG-style approach, retrieving relevant documents as context rather than relying on training data. For any workflow I depend on, I build basic evaluations to measure whether my approach actually works.”
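If an interviewer asks you to make the Level 3 answer concrete, it helps to have a small picture in mind. The sketch below is a toy illustration, not a production setup: the documents, the word-overlap retriever, and the names `DOCS`, `retrieve`, `build_prompt`, `EVAL_SET`, and `evaluate` are all hypothetical. It shows the three ideas from the example answer in miniature: retrieving a relevant document as context (RAG-style), asking the model to reason step by step, and running a basic evaluation to check that the retrieval step picks the right document.

```python
import re

# Hypothetical in-memory "company documents"; a real setup would use a document store.
DOCS = {
    "vacation": "Employees accrue vacation days monthly and may carry over five days.",
    "expenses": "Submit expense reports within thirty days with itemized receipts.",
    "security": "Rotate passwords every ninety days and enable two-factor login.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, docs=DOCS):
    """Return the id of the document sharing the most words with the question.

    Word overlap is a stand-in (an assumption for this sketch); real systems
    typically use embeddings or a search index.
    """
    question_words = tokenize(question)
    return max(docs, key=lambda doc_id: len(question_words & tokenize(docs[doc_id])))


def build_prompt(question, docs=DOCS):
    """Build a prompt that supplies retrieved context and asks for step-by-step reasoning."""
    doc_id = retrieve(question, docs)
    return (
        f"Context:\n{docs[doc_id]}\n\n"
        f"Question: {question}\n"
        "Think step by step, and answer using only the context above."
    )


# A tiny labeled set: each question is paired with the document it should retrieve.
EVAL_SET = [
    ("How many vacation days can I carry over?", "vacation"),
    ("When are expense reports with receipts due?", "expenses"),
    ("How often should I rotate passwords?", "security"),
]


def evaluate(eval_set=EVAL_SET):
    """Return the fraction of questions for which retrieval picked the expected document."""
    hits = sum(retrieve(question) == expected for question, expected in eval_set)
    return hits / len(eval_set)


if __name__ == "__main__":
    print(build_prompt("How often should I rotate passwords?"))
    print(f"Retrieval accuracy: {evaluate():.0%}")
```

The point to make in the interview is the habit, not the code: you write down a few cases where you know the right answer, then check your workflow against them before you rely on it.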

Role-Specific Guidance

Engineers/Architects

Show technical understanding and disciplined verification.

“I use Copilot daily, but with guardrails. It’s excellent for boilerplate and test scaffolding. For complex logic, I treat suggestions like code review comments—potentially useful, but requiring evaluation. I never commit code I can’t explain.”

Product Managers

Demonstrate that you use AI to accelerate PM work (research, specs, analysis) while thinking critically about limitations.

“As a PM, I use AI most heavily in discovery—synthesizing research, analyzing competitors, drafting specs. Where I don’t use it: prioritization decisions and stakeholder communications where nuance matters. What I’ve learned about AI products: impressive demos don’t equal reliable production use. When evaluating AI features, I focus on failure modes.”

Analysts

Emphasize verification habits, because analysis errors have consequences.

“I use AI to accelerate exploratory analysis—cleaning scripts, segmentation approaches, pattern identification. What I’ve learned: AI finds patterns but can’t determine which patterns matter. That requires business context. For any finding I plan to share, I trace back to raw data manually.”

Marketing / Non-Technical

Show adoption without naivety.

“I use AI for first drafts and ideation. It expands the options I consider. What I’ve learned: AI writes generically. The value I add is voice, specificity, and judgment about what resonates with our audience. I’m careful about facts—AI confidently makes things up.”

What to Say If You Haven’t Used AI Much

Maybe your company restricts AI tools. Maybe you haven’t had good use cases. Don’t panic.

Template: “I haven’t used AI extensively because [honest reason]. But I’ve [steps to learn]. My impression is [thoughtful observation].”

Example: “My current company has strict AI policies because of client data concerns. But I’ve experimented personally. I used AI to help analyze a home renovation project. My impression: it’s powerful for structuring information but requires verification and judgment. I’m curious what applications have worked well in this role.”

This is honest without being defensive. It shows intellectual engagement despite limited use.

Common Mistakes

Overclaiming: “I’m basically an AI expert” invites follow-ups you can’t answer. Be precise: “I’m a regular user with a reliable process” is credible.

Underselling: “I just use ChatGPT sometimes” makes you seem behind. Even modest use can sound thoughtful: “I’ve learned that quality depends on how specific I am about what I need.”

Making it your whole identity: You’re interviewing for PM or Engineer, not “AI User.” Weave AI naturally into stories. Lead with the business problem and outcome, not the tool.

Ignoring limitations: “AI handles everything” signals you haven’t used it seriously. Showing limitations builds credibility: “I learned to verify Y because it makes confident errors.”

Quick Reference

Before your interview, prepare:

One specific example of effective AI use (with outcome)

One thing you learned about AI’s limitations

Your answer for “How do you verify AI outputs?”

In the interview:

Match depth to role expectations

Show judgment, not just usage

Be honest about what you don’t know

Lead with business impact, not the tool

The goal isn’t to sound like an AI expert. It’s to sound like someone who uses tools thoughtfully, learns from experience, and adapts as technology changes.

That’s what interviewers are actually assessing.

I would like to extend a big thank you to Vamsi Narla for sharing his insights with Big Tech Careers readers.

Vamsi is the founder of Revarta, an AI-powered interview practice platform. He’s conducted over 1,000 interviews at Amazon, Google, Nvidia, Adobe, and Remitly.

We are hosting a FREE lightning talk on Friday, January 16th, where we will dive deep into strategies for preparing for big tech interviews. We will also have a special offer for you to try the Revarta platform.