How to Transition from Developer or Architect to AI FDE Roles

The Knowledge Gap (It’s Smaller Than You Think)

Hey, Prasad here 👋 I’m the voice behind the weekly newsletter “Big Tech Careers.”

In this week’s article, I share how to use your existing skills, and build a few new ones, to transition into an AI Forward Deployed Engineer (FDE) role.

If you like the article, click the ❤️ icon. That helps me know you enjoy reading my content.

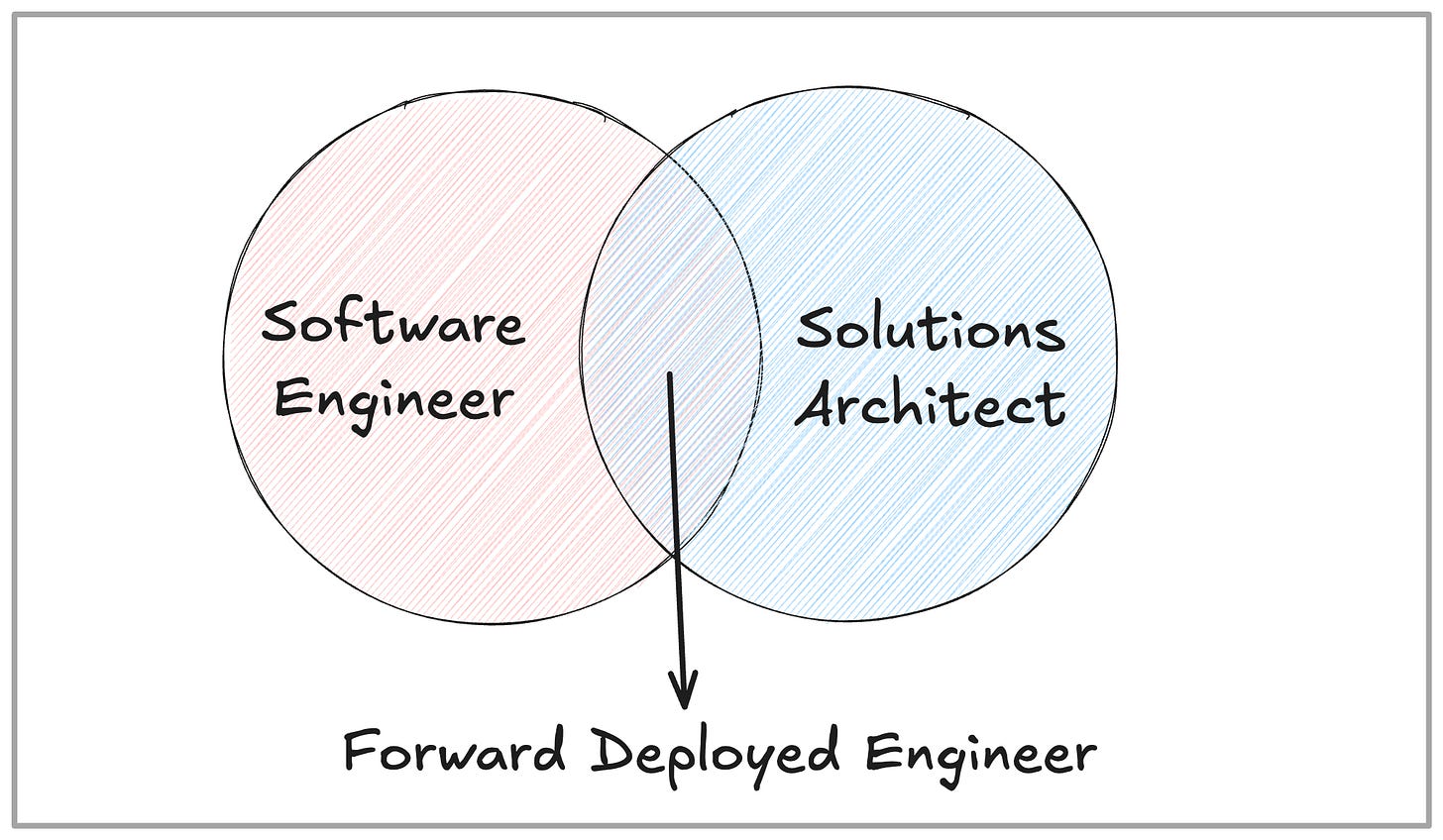

Last quarter, I wrote an article on how the Forward Deployed Engineer (FDE) role represents a unique intersection between Software Engineers (SWE) and Solutions Architects (SA): a hybrid discipline combining technical depth with customer-facing consulting skills.

In this article, I’ll address how SA/SWE professionals are uniquely positioned to excel in this emerging AI FDE space. It’s not just about learning LLMs or Agentic AI. Frankly, that’s the easy part, and anyone can do it. It’s about translating the strengths that made you successful in your existing role into a new domain.

Understanding the Forward Deployed Engineer

Forward Deployed Engineers are technical professionals who work directly at client sites or within specific business units, serving as the crucial link between engineering teams and end-users.

Unlike traditional engineers who primarily work within the confines of their own organization’s development environment, FDEs embed themselves in the operational context where technology meets real-world challenges.

The FDE role is also a significant evolution from traditional customer-facing SA roles.

FDEs maintain a continuous, hands-on presence throughout the entire customer lifecycle. They don't just design solutions or oversee implementations. They actively participate in day-to-day operations, troubleshoot issues in real time, and evolve systems based on actual usage patterns.

FDEs implement solutions using existing product capabilities, identify limitations or opportunities during deployment, and then contribute code and features back to the core product to address these gaps. They serve as both the implementer and the product developer, ensuring that products evolve in direct response to real-world customer needs rather than theoretical requirements.

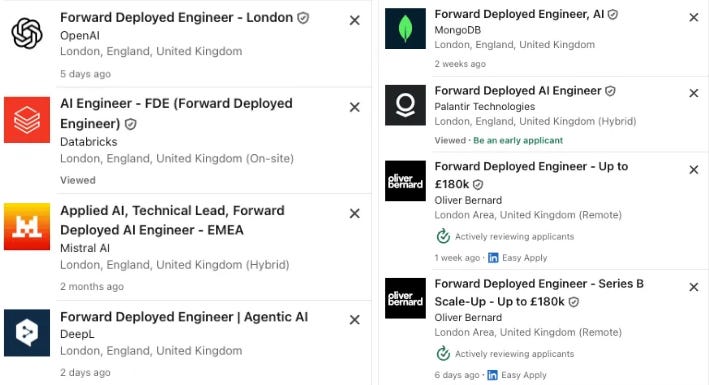

The Rise of AI FDE Roles

A LinkedIn search for AI FDE roles shows hundreds of openings in the London area alone, from companies of all sizes.

Enterprise AI deployment is a $200B+ opportunity, yet the majority of pilot projects stall before reaching production. Why? Because shipping AI to production is not mainly about model accuracy. It takes the same skill set that traditional roles use to ship software to production. That’s where SAs and SWEs shine, and where the FDE role helps close the gap in the AI world.

Understanding customer reality (SA strength): Knowing why a customer wants AI, not just how to build it

Shipping under constraints (SWE strength): Building RAG pipelines, agents, and APIs that actually work in production

Bridging the gap (FDE strength): Translating ML capabilities into business language and managing expectations when demos look flashy but production is messy

The best AI FDEs we’re seeing are not AI researchers; they’re SA/SWE hybrids who learned the fundamentals of AI. That’s probably you!

The Strengths You Already Have (And How to Leverage Them)

If You’re an SA

Your superpower: Understanding customer ecosystems, discovering hidden requirements, and turning vague business pain into technical specs.

In the AI world: This becomes use case discovery. Instead of “build a CRM integration,” you’re asking: “Should we use RAG, fine-tuning, or agents? What data quality do we have? What’s the cost-accuracy tradeoff?”

Translate this by learning:

LLM fundamentals (tokenization, context windows, temperature): The technical language of AI products

RAG architecture: Why retrieval beats fine-tuning 80% of the time; how chunking and embeddings work

Cost/latency tradeoffs: How to explain why a customer’s “perfect AI solution” needs 3 versions (fast/cheap, accurate/slow, balanced)
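To make temperature concrete: chat APIs generally expose a `temperature` parameter that rescales the model's next-token scores (logits) before they become probabilities. The sketch below is a minimal illustration using made-up logits for three candidate tokens, not output from any real model. It shows how a low temperature sharpens the distribution and a high one flattens it.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores (logits) into probabilities at a given temperature."""
    if temperature <= 0:
        # As temperature approaches 0 this approaches greedy decoding (always the top token);
        # this sketch only accepts positive values.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up logits for three candidate next tokens (not from a real model).
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

With these numbers, the top token gets over 99% of the probability at temperature 0.2 and only about half at 2.0. That is roughly the intuition behind using low temperatures for extraction-style tasks and higher ones for brainstorming.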

Your unfair advantage: You already know how to ask discovery questions. Most AI projects fail at discovery, not implementation.

If You’re an SWE

Your superpower: Shipping code under pressure, debugging production chaos, and building systems that scale.

In the AI world: This becomes production deployment. You’re building the infrastructure that keeps RAG pipelines, agents, and LLM services running at 99.9% uptime.

Translate this by learning:

LLM APIs and libraries (OpenAI, Anthropic, LangChain): The frameworks for building with AI

Vector databases and embeddings: The new infrastructure layer (Pinecone, Weaviate, and similarity-search libraries such as FAISS)

Deployment patterns: FastAPI + Docker + monitoring for LLM services (you’ve done this with REST APIs—LLMs just add complexity)

Observability for AI: Tracking token costs, latency, and “hallucination” rates (your logging skills transfer directly)
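The observability point is easiest to see in code. Below is a minimal sketch, assuming a hypothetical `fake_llm` function in place of a real provider SDK and made-up per-token prices. It wraps each call and emits one structured JSON log line with latency, token counts, and estimated cost, the same pattern you would use for any other external dependency.

```python
import json
import logging
import time
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("llm_service")

# Hypothetical prices in USD per 1,000 tokens; check your provider's current pricing.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015

@dataclass
class LLMResult:
    text: str
    input_tokens: int
    output_tokens: int

def fake_llm(prompt: str) -> LLMResult:
    """Hypothetical stand-in for a provider SDK call."""
    answer = "Refunds are available within 30 days."
    # Crude token counts (whitespace-separated words); real providers report exact usage.
    return LLMResult(answer, len(prompt.split()), len(answer.split()))

def observed_call(prompt: str, request_id: str):
    """Call the model and emit one structured log line with latency, tokens and cost."""
    start = time.perf_counter()
    result = fake_llm(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    cost_usd = ((result.input_tokens / 1000) * PRICE_PER_1K_INPUT
                + (result.output_tokens / 1000) * PRICE_PER_1K_OUTPUT)
    metrics = {
        "request_id": request_id,
        "latency_ms": round(latency_ms, 2),
        "input_tokens": result.input_tokens,
        "output_tokens": result.output_tokens,
        "cost_usd": round(cost_usd, 8),
    }
    log.info(json.dumps(metrics))  # one JSON line per request, easy to aggregate later
    return result, metrics

result, metrics = observed_call("What is the refund policy?", request_id="req-001")
```

Hallucination rates can't be read off a log line like this; they need an evaluation step on top (for example, spot-checking sampled answers against source documents), which this sketch doesn't cover.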

Your unfair advantage: You understand production. You’ve debugged 3am outages. Most AI prototypes are still stuck on the question “Why does the demo work but production fail?” That’s your bread and butter.

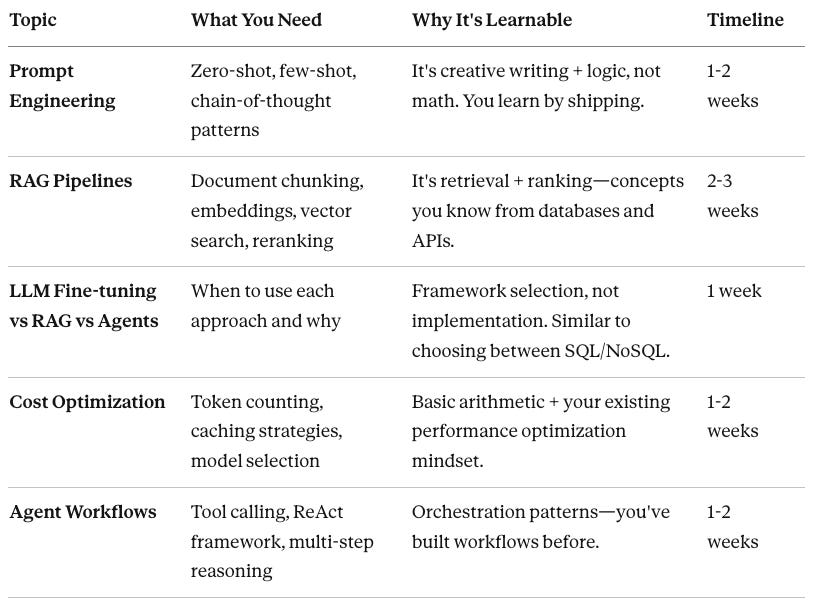

The Knowledge Gap (It’s Smaller Than You Think)

Here’s what you do need to learn, and why it’s not as daunting as it seems:

Total ramp time: With 5-8 weeks of dedicated ramp-up, you will be unstoppable.

What You Already Know That Transfers Directly

Your existing skills are more valuable in AI FDE roles than you might expect:

Discovery & Listening → Identifying which LLM use case actually solves the customer’s problem

System Design → Designing RAG systems that handle 10M enterprise documents

Debugging Production Issues → Figuring out why the customer’s AI feature returns garbage

Cost Optimization → Understanding why token costs explode at scale

Stakeholder Management → Explaining why fine-tuning isn’t the answer to every problem

Documentation → Writing runbooks that help customers operate AI systems independently

Shipping Under Pressure → Prioritizing between accuracy, speed, and cost in 2-week sprints
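The “token costs explode at scale” point is mostly arithmetic. Here is a small sketch with hypothetical prices and traffic (not any provider's actual rates) showing how the same per-request cost scales from pilot to production, and what happens when retrieval stuffs more context into every prompt.

```python
def monthly_token_cost(requests_per_day, input_tokens, output_tokens,
                       price_in_per_1k, price_out_per_1k, days=30):
    """Estimate monthly spend for an LLM feature from per-request token counts."""
    per_request = ((input_tokens / 1000) * price_in_per_1k
                   + (output_tokens / 1000) * price_out_per_1k)
    return per_request * requests_per_day * days

# Hypothetical prices (USD per 1,000 tokens) and traffic numbers, for illustration only.
PRICE_IN, PRICE_OUT = 0.003, 0.015

pilot = monthly_token_cost(100, 2_000, 500, PRICE_IN, PRICE_OUT)
production = monthly_token_cost(50_000, 2_000, 500, PRICE_IN, PRICE_OUT)
# Same traffic, but retrieval now puts 8,000 tokens of context into every prompt.
bigger_context = monthly_token_cost(50_000, 8_000, 500, PRICE_IN, PRICE_OUT)

for label, cost in [("pilot", pilot), ("production", production),
                    ("production, 8K context", bigger_context)]:
    print(f"{label:>24}: ${cost:,.2f}/month")
```

With these assumed numbers, roughly $40 a month in the pilot becomes about $20,000 at production traffic, and over $47,000 once each prompt carries four times as much context. That last jump comes entirely from input tokens, which is one reason trimming retrieved context is a common early cost lever.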

These aren’t “nice-to-have” skills in AI FDE roles. They’re the primary differentiators. And you already have them. You just need to map them to the AI domain.

Your Learning Path

For AI FDE roles, you don’t need to go deep on ML theory. Go wide instead: understand the AI fundamentals and learn how to ship AI.

Timelines for each stage might vary depending on your specific situation. Each stage can take a week, a month, or more, but it’s definitely doable with consistent effort.

Stage 1: AI Foundations + First Shipped Demo

Spend 3-4 days on LLM fundamentals (tokenization, APIs, prompt patterns)

Build a working chatbot (Streamlit + OpenAI) in a weekend

Deploy it somewhere (Vercel, Render) and add monitoring

Outcome: Your first “production AI” app (even if it’s simple)
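A first chatbot usually hits the context window quickly, because every turn resends the conversation history. One common fix is to keep the system prompt and drop the oldest turns once a token budget is exceeded. The sketch below assumes the role/content message shape that chat-style APIs commonly use, and estimates tokens with a rough 4-characters-per-token heuristic; a real app would count tokens with the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters per token for English text."""
    return max(1, len(text) // 4)

def trim_history(messages, max_tokens):
    """Keep system messages plus the newest turns that fit inside max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for m in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(m["content"])
        if cost > budget:
            break  # drop this turn and everything older than it
        kept.append(m)
        budget -= cost
    return system + kept[::-1]  # restore chronological order

history = [
    {"role": "system", "content": "You are a helpful support assistant."},
    {"role": "user", "content": "a" * 400},       # placeholder long turn, ~100 tokens
    {"role": "assistant", "content": "b" * 400},  # placeholder long turn, ~100 tokens
    {"role": "user", "content": "Can you summarise that?"},
]
trimmed = trim_history(history, max_tokens=130)
print([m["role"] for m in trimmed])
```

Here the oldest user turn no longer fits, so it is dropped while the system prompt and the two most recent turns are kept.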

Stage 2: RAG Deep Dive + Enterprise Architecture

Learn embeddings, vector databases, and retrieval patterns

Build a RAG system on real documents (PDFs, Notion, Slack exports)

Deploy with FastAPI + authentication + cost tracking

Outcome: You understand why RAG is the workhorse of enterprise AI
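The core RAG loop (chunk, embed, retrieve, then build a grounded prompt) fits in a few dozen lines. In this minimal sketch, a bag-of-words vector stands in for a real embedding model and a sorted list stands in for a vector database, so the ranking is only illustrative. The chunk size, overlap, documents, and prompt wording are all arbitrary assumptions.

```python
import math
import re
from collections import Counter

def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of chunk_size words, each overlapping the previous by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query, context_chunks):
    """Ground the model by putting retrieved chunks in the prompt."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

docs = [
    "A refund is available within 30 days of purchase with a receipt.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Enterprise customers can request single sign-on through their account manager.",
]
chunks = [chunk for doc in docs for chunk in chunk_words(doc)]
question = "How many days do I have to request a refund?"
print(build_prompt(question, retrieve(question, chunks, k=1)))
```

Swapping `embed` for a real embedding model and `retrieve` for a vector-store query keeps the same overall shape; chunk size and overlap are the knobs you will likely tune most on real documents.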

Stage 3: Agents + Customer Handoff

Learn agent frameworks (LangChain agents, ReAct)

Build an agent that integrates with a real API (Salesforce mock, Stripe, etc.)

Write a complete handoff package (runbooks, troubleshooting, training)

Outcome: You can build and hand off complex AI systems
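Under the hood, a ReAct-style agent is a loop: the model proposes a reasoning step and an action, your code runs the tool, and the result goes back to the model as an observation until it produces a final answer. Frameworks like LangChain wrap this loop for you. The sketch below shows the loop itself, with a scripted function standing in for the LLM and a mock CRM lookup as the tool; the tool, the customer data, and the dict-based step format are all hypothetical.

```python
import json

def lookup_customer(customer_id: str) -> dict:
    """Hypothetical tool: a mock CRM lookup standing in for a real API such as Salesforce."""
    fake_crm = {"C-42": {"name": "Acme Corp", "plan": "Enterprise", "open_tickets": 3}}
    return fake_crm.get(customer_id, {"error": "customer not found"})

TOOLS = {"lookup_customer": lookup_customer}

def scripted_model(history):
    """Stand-in for an LLM: requests one tool call, then answers from the observation."""
    observations = [m for m in history if m["role"] == "observation"]
    if not observations:
        return {"thought": "I need the customer's record.",
                "action": "lookup_customer", "input": "C-42"}
    record = json.loads(observations[-1]["content"])
    if "error" in record:
        return {"thought": "The lookup failed.", "final_answer": "I couldn't find that customer."}
    return {"thought": "I have what I need.",
            "final_answer": f"{record['name']} has {record['open_tickets']} open tickets."}

def run_agent(question, model, tools, max_steps=5):
    """ReAct-style loop: the model proposes an action, we run the tool, feed back the observation."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)
        if "final_answer" in step:
            return step["final_answer"]
        tool = tools.get(step["action"])
        observation = tool(step["input"]) if tool else {"error": f"unknown tool {step['action']}"}
        history.append({"role": "assistant", "content": json.dumps(step)})
        history.append({"role": "observation", "content": json.dumps(observation)})
    return "Stopped: step limit reached without a final answer."

print(run_agent("How many open tickets does customer C-42 have?", scripted_model, TOOLS))
```

The `max_steps` cap is the kind of guardrail worth documenting in a handoff runbook: it bounds cost and stops an agent that keeps calling tools without converging.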

Stage 4: Portfolio + Interview Prep

Package your 3 projects into a case study portfolio

Practice technical interviews (system design + field scenarios)

Tailor your resume: emphasize discovery, production debugging, cross-team communication

Outcome: Interview-ready for AI FDE roles!

The Behavioral Edge That Actually Matters

Here’s what separates the top 10% of AI FDEs from the rest:

Discovery mastery: You ask the right questions before coding. “What’s your data quality?” “Who owns this decision?” “What does success look like in 6 months?”

Bias toward shipping: You know that a working prototype beats a perfect design doc. You get feedback in production and iterate.

Production paranoia: You ask the questions most engineers don’t: What happens when the API fails? How do we monitor for hallucinations? What’s our rollback plan?

Stakeholder translation: You explain technical tradeoffs in business language. “We could use fine-tuning, but that costs $50K upfront and takes 6 weeks. RAG is ready in 2 weeks for $2K/month and we can iterate faster.”

Expectations management: You know that AI demos look perfect, but production is messy. You set realistic timelines and deliver on them.

These aren’t skills you need to learn. You already have them. You just need to apply them to AI.
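The production-paranoia question “What happens when the API fails?” usually turns into retries with exponential backoff plus a graceful fallback. Here is a minimal sketch, assuming a hypothetical `TransientAPIError` for timeouts and rate limits and placeholder primary and fallback functions; in practice the primary would wrap your provider's SDK.

```python
import random
import time

class TransientAPIError(Exception):
    """Hypothetical error type for timeouts, rate limits and 5xx responses."""

def call_with_retries(primary, fallback, prompt, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Try the primary model with exponential backoff, then degrade to a fallback."""
    for attempt in range(1, max_attempts + 1):
        try:
            return primary(prompt)
        except TransientAPIError:
            if attempt == max_attempts:
                break
            # Exponential backoff with jitter: base_delay, then 2x, 4x, ... scaled by a random factor.
            sleep(base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5))
    return fallback(prompt)

attempts = {"count": 0}

def flaky_primary(prompt):
    """Hypothetical primary model call that always times out in this demo."""
    attempts["count"] += 1
    raise TransientAPIError("upstream timeout")

def safe_fallback(prompt):
    """Hypothetical degraded path: a canned response plus a human handoff."""
    return "The assistant is temporarily unavailable. A support agent will follow up."

# Pass a no-op sleep so the demo runs instantly.
answer = call_with_retries(flaky_primary, safe_fallback, "Summarise this ticket", sleep=lambda s: None)
print(attempts["count"], answer)
```

Injecting `sleep` keeps the demo instant and makes the retry logic easy to unit-test; in production you would also log each failed attempt, as you would for any other service.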

The Honest Truth About the Transition

Getting into AI is not a career restart. It’s a lateral move using your existing strengths in a new domain.

You won’t be starting from zero. Your discovery skills, production debugging skills, and stakeholder management will get you there in 3-6 months.

The knowledge gaps are real but narrow. LLMs are new, but the patterns—retrieval, caching, observability, authentication—are old. You know these.

Your SA/SWE background is your competitive advantage. The market is hot for people who understand production, customers, and complexity. The only thing missing is a little bit of AI fluency.

Enjoyed reading the article? You might also enjoy the most popular posts from Big Tech Careers:

You’re Doing STAR Format Answers Wrong. Here’s How to Do It the Right Way

The One-Minute Elevator Pitch That Will Transform Your Interview Introduction

The Complete Blueprint for Using AI in Your Interview Process
